In today’s world, where artificial intelligence (AI) impresses with its predictive power and deep learning feats, one might conclude that it has already changed the face of medicine. As Dr. Kacper Sokol and his colleagues argue in their perspective piece in the journal npj Digital Medicine (2025), this is anything but true. Although AI can perform tasks with superhuman accuracy on standardized datasets, most AI models remain stuck in research laboratories rather than reaching hospitals.

Their essay is at the same time critique and road map—recommending how to construct AI systems that think with doctors rather than thinking for them.

From Superhuman to Super-Supportive

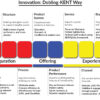

Sokol, Fackler, and Vogt dismantle the myth that the aim of medical AI is to outperform physicians. They propose instead a sociotechnical and cognitive collaboration in which AI supplements rather than substitutes for physician cognition. Data-driven technologies, they argue, must integrate with the physician’s mental models and decision environment, from perception and inference to uncertainty and empathy.

As Eric Topol argues in “High-Performance Medicine,” the final goal of AI is not to replace physicians but to restore the “human touch,” so that physicians are free to do what only people can do: care. An apt Indian parallel comes from Dr. A. P. J. Abdul Kalam: “Science is a beautiful gift to humanity; we should not distort it.” The science here is about augmentation.

The Parable of the Two Patients

To convey the shortcomings of static models, the article offers a thought experiment: two patients, one improving and one deteriorating, can produce the same data point at a given moment. A superficial AI would return the same result for both, ignoring how each patient arrived at that value. The story captures an important reality: life is process-based, not data-based.

The need, therefore, is for “temporal-aware” AI that models the “when” and not only the “what.” In this respect, the “temporal wisdom” suggested here resonates with the Upanishadic vision of “Kala Jnana,” which goes beyond the “now” to apprehend emergent processes.
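The two-patient parable can be made concrete with a small sketch. The readings below are hypothetical numbers invented for illustration (they are not taken from the article), and `static_view`, `temporal_view`, and `slope` are illustrative helper names. The point is only that a model looking at the latest value cannot tell the two patients apart, while one that also looks at the trend can.

```python
from statistics import mean


def slope(values):
    """Least-squares slope of equally spaced readings (units per time step)."""
    xs = list(range(len(values)))
    x_bar, y_bar = mean(xs), mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, values))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def static_view(values):
    """What a static model sees: only the most recent reading."""
    return values[-1]


def temporal_view(values):
    """What a temporal-aware model sees: the latest reading and its trend."""
    return values[-1], slope(values)


# Hypothetical hourly lab readings, invented for illustration only.
improving = [4.0, 3.5, 3.0, 2.5]
deteriorating = [1.0, 1.5, 2.0, 2.5]

for name, readings in [("improving", improving), ("deteriorating", deteriorating)]:
    latest, trend = temporal_view(readings)
    print(f"{name}: latest={latest}, trend per hour={trend:+.2f}")
```

Both patients share the same latest reading, yet their trends carry opposite signs. A real temporal model would be far richer than a fitted slope; this is only the smallest possible version of the idea.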

Sepsis as a Mirror

The case study in the article, paediatric sepsis, illustrates medicine’s struggle with complexity. Sepsis remains poorly understood and continues to cause significant mortality despite many research efforts. Predictive models exist, yet they remain largely unused in clinical settings. Why?

The answer is reflected in the Indian fable of the blind men and the elephant: each grasps a piece of the truth, but no one has the whole picture. Similarly, AI working from incomplete data risks producing incomplete truths.

Advantages: Promise of Partnership

A human-centered model of AI presented by the authors has various advantages:

- Cognitive Support – By pointing out blind spots, countering anchoring bias, and enabling “what if” simulations, AI can improve diagnostic thinking.

- Consistency in Decision Making – By reducing “decision noise,” AI can limit unwarranted variation in how different clinicians treat similar patients.

- Training and Reflection – Simulation-based AI may act as a “digital twin” mentor, letting doctors rehearse challenging decisions.

- Hybrid Intelligence – The combination of human intuition and algorithmic computation leads to creativity and confidence in critical care.

In essence, AI becomes a mirror to human cognition that gently shows clinicians their mistakes and leads them to improve, a digital version of the Guru.

Cons: The Caveats of Over-Automation

But the authors caution against techno-utopianism as well. Overdependence on complex models can lead to automation bias, in which healthcare professionals blindly trust a model’s output. Worse, biased data can perpetuate discrimination, as seen in various international scandals involving models that disadvantaged minorities and women. Philosopher L. Munn refers to this problem as the “myth of automation”.

Furthermore, AI developed without an understanding of how clinicians actually work may end up burdening medical personnel with extra clicks and data-entry requirements. In India’s resource-constrained hospitals, where empathy fills the gaps that technology leaves, poorly implemented AI may accentuate rather than alleviate clinicians’ frustrations.

Bridging the Translational Gap

The key takeaway is that the barrier is sociotechnical rather than purely technical. Getting past it requires humility: designing AI that fits within the clinical ecological niche of rounds, protocols, emotions, and values. A successful AI system would function more like a smart colleague than an oracle.

The notion of ante-hoc interpretability, that is, designing models that are inherently interpretable, is the antithesis of post-hoc “explainability.” As data scientist Cynthia Rudin has pointed out, black box models are unacceptable in critical areas such as medicine. Trust is built with transparency, not opacity.
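As a rough illustration of what “inherently interpretable” can mean in practice, consider a transparent points-based score in which every contribution to the result is visible by construction. The rules, thresholds, and point values below are hypothetical, invented purely for this sketch; they are not clinically validated and do not come from the article.

```python
# Hypothetical rules and point values, invented for illustration only.
# They are NOT clinically validated and do not come from the article.
RULES = [
    ("heart_rate > 150", lambda p: p["heart_rate"] > 150, 2),
    ("temperature > 38.5", lambda p: p["temperature"] > 38.5, 1),
    ("lactate > 2.0", lambda p: p["lactate"] > 2.0, 3),
]


def score(patient):
    """Return the total points and the list of (rule, points) that fired."""
    fired = [(name, points) for name, test, points in RULES if test(patient)]
    total = sum(points for _, points in fired)
    return total, fired


# A made-up patient record used only to exercise the function.
patient = {"heart_rate": 160, "temperature": 38.0, "lactate": 2.4}
total, reasons = score(patient)

print(f"score = {total}")
for name, points in reasons:
    print(f"  +{points} because {name}")
```

Here the explanation is not generated after the fact: the list of rules that fired is the model’s reasoning. A clinician can inspect, question, or override each line, which is the kind of transparency the ante-hoc approach aims for.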

Lessons from East and West

From an international perspective, this vision echoes Atul Gawande’s essay “Why Doctors Hate Their Computers,” in which he bemoaned technology that disrupts clinical workflow rather than supporting it. Likewise, Mahatma Gandhi’s words, “The machine should not be the master of man,” convey the same idea.

In rural Indian hospitals, where doctors manage dozens of patients at once, AI that flags sepsis risk factors or improves antibiotic use could be revolutionary. But as the authors correctly point out, the key to success is practical “intelligence” rather than “genius,” meaning adoption fidelity: whether the tool is actually used as intended at the bedside.

A New Paradigm – AI as a Cognitive Partner

“Picture an AI dashboard that can do more than just predict mortality rates,” writes Silberman. It might pose questions such as these to the clinician:

“Are you anchoring on the first lab result?”

“Have you considered an atypical presentation?”

Such a system acts more as a “cognitive coach” and less as a “calculator.”

This is perhaps the real edge that digital medicine has to offer: the movement from machine learning to machine teaching. “It highlights,” writes Chris Berardi in “Machine Teaching,” “what can be referred to as hybrid intelligence,” after the definition by Sokol et al.: “a collaboration between human intelligence and artificial intelligence.”

Conclusion: From Benchmarks to Bedside

As the authors conclude, “A modest AI that ‘just works’ can be more valuable to clinicians’ work than ‘sexy’ AI that exists only as models in the lab.” Indeed, this paper is to be applauded for positioning AI as the helper rather than the threat to human intelligence.

Or, in Tagore’s timeless words:

“The highest education is that which does not merely give us information but makes our life in harmony with all existence.”

Similarly, the best artificial intelligence is not that which always predicts correctly but that which integrates with human intelligence to enable healers to heal better.

Acknowledgment: Heartfelt thanks to Dr. Kacper Sokol, Dr. James Fackler, and Dr. Julia E. Vogt, and to the journal npj Digital Medicine for publishing this thought-provoking article, which reframes the use of AI in medicine from autonomous computing to compassionate collaboration.

Dr. Prahlada N.B

MBBS (JJMMC), MS (PGIMER, Chandigarh).

MBA in Healthcare & Hospital Management (BITS, Pilani),

Postgraduate Certificate in Technology Leadership and Innovation (MIT, USA)

Executive Programme in Strategic Management (IIM, Lucknow)

Senior Management Programme in Healthcare Management (IIM, Kozhikode)

Advanced Certificate in AI for Digital Health and Imaging Program (IISc, Bengaluru).

Senior Professor and former Head,

Department of ENT-Head & Neck Surgery, Skull Base Surgery, Cochlear Implant Surgery.

Basaveshwara Medical College & Hospital, Chitradurga, Karnataka, India.

My Vision: I don’t want to be a genius. I want to be a person with a bundle of experience.

My Mission: Help others achieve their life’s objectives in my presence or absence!

My Values: Creating value for others.

Dear Dr. Prahlada N. B Sir,

With your article, "AI in Medicine: From Superhuman Myths to Human-Centric Wisdom," I must say it's truly inspiring to see how you're bridging the gap between AI and medical expertise.

Your emphasis on integrating AI with doctors' intelligence, rather than replacing it, resonates deeply. By combining human intuition with algorithmic computation, we can indeed create a hybrid intelligence that enhances patient care.

The idea of AI as a "cognitive coach" rather than a calculator is particularly noteworthy. It's about designing AI systems that support clinicians, providing them with valuable insights and suggestions, rather than overwhelming them with data.

Your vision of AI augmenting human intelligence, rather than replacing it, is the way forward. I'm excited to see how your work will continue to shape the future of healthcare.

Thank you for sharing your expertise and insights. Your contributions to the field of medicine are truly making a difference.
