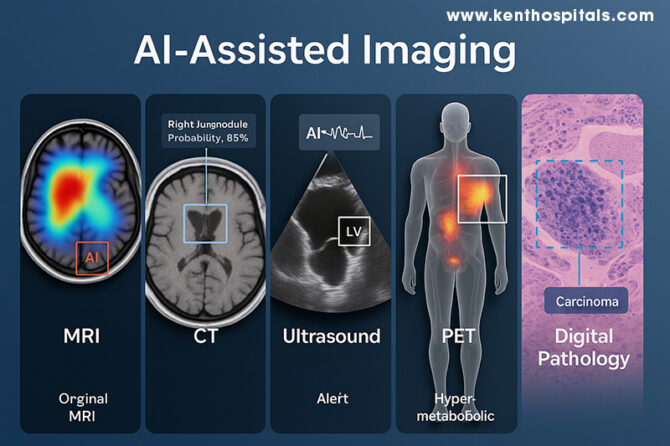

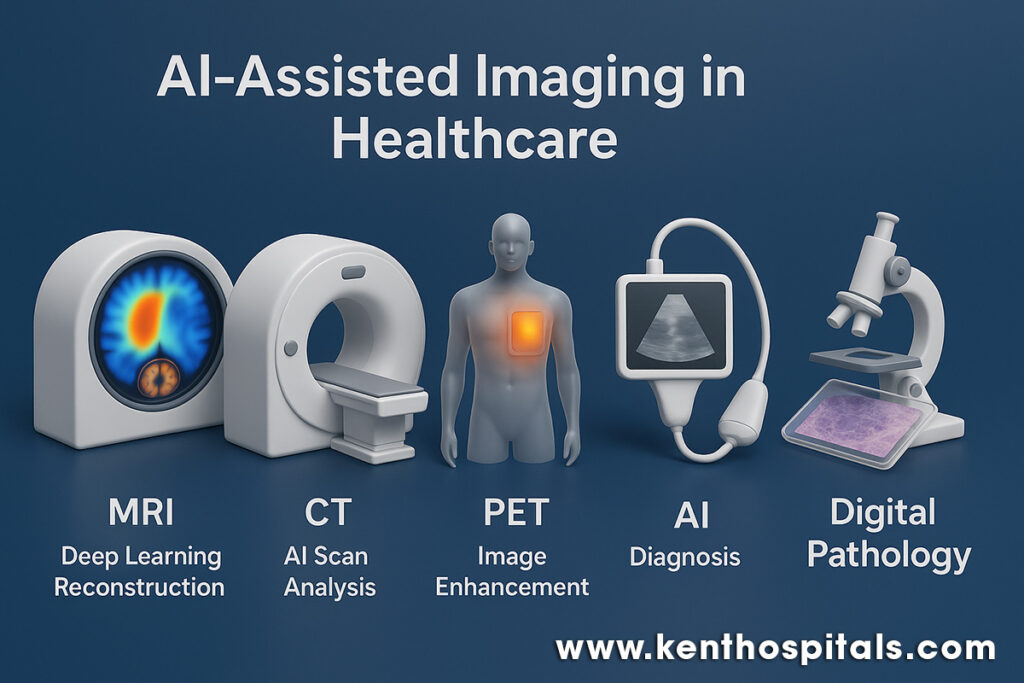

In modern medicine, artificial intelligence (AI) is redefining the way clinicians see and interpret the human body. Just as a lighthouse helps ships navigate through thick fog, AI points out details in medical images that might otherwise go undetected. AI-augmented imaging extends across modalities, including magnetic resonance imaging (MRI), computed tomography (CT), ultrasound, positron emission tomography (PET), and digital pathology, and in each of them machine learning algorithms supplement human expertise. The change is not only technological; it is transforming diagnostic processes, patient outcomes, and the very nature of clinical decision-making.

In MRI and CT, AI has made notable strides through deep learning–based tomographic reconstruction, which produces high-quality images from under-sampled data. This shortens scan times and, in CT, lowers radiation exposure without compromising diagnostic performance (1). The process is akin to a sculptor chiselling away excess stone to reveal the form within: the neural network discards noise and reconstructs the essential detail. Advanced convolutional neural networks (CNNs) can now detect patterns imperceptible to the naked eye, improving both sensitivity and specificity in detecting intracranial and thoracic abnormalities (6). Having learned from millions of prior images, these systems act like apprentices who have watched countless masters at work and can now spot the faintest flaw in an otherwise flawless piece of embroidery.

PET imaging, particularly with hybrid PET/MRI scanners, benefits equally from AI. Combining functional and anatomical information allows hypermetabolic areas to be localised precisely, which is essential in oncology, neurology, and cardiology (3,4). AI contributes not only to segmentation and quantitation but also to denoising and deblurring, improving image clarity and reproducibility (2). This is analogous to tuning a radio to reduce static so that a weak but essential melody becomes audible. Refined by AI, PET's functional information can support earlier intervention and more accurate therapy planning.

Ultrasound, by contrast, is a dynamic, operator-dependent modality in which images are interpreted in real time. Here AI offers consistency and vigilance, automatically detecting cardiac structures such as the left ventricle and flagging abnormal motion that may reveal dysfunction (13). In clinical research, AI-augmented interpretation of echocardiograms has improved measurement reproducibility and reduced interpretation time without loss of accuracy (12,13). The net effect is like having a second expert in the room, albeit one who never tires, never looks away, and can instantly flag subtle deviations.
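To make concrete the kind of measurement such tools automate, here is a minimal sketch of one standard index of left ventricular function, the ejection fraction, EF = (EDV − ESV) / EDV × 100, where EDV and ESV are the end-diastolic and end-systolic volumes. It assumes the volumes have already been obtained; in an AI-assisted workflow they would typically come from automated segmentation of the left ventricle, which is not shown. The function name and the example volumes are hypothetical.

```python
def ejection_fraction(edv_ml: float, esv_ml: float) -> float:
    """Left ventricular ejection fraction (%) from volumes in millilitres.

    EF = (EDV - ESV) / EDV * 100, where EDV is the end-diastolic volume
    and ESV is the end-systolic volume. The volumes are assumed to come
    from an upstream (hypothetical) automated LV segmentation step.
    """
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    if not 0 <= esv_ml <= edv_ml:
        raise ValueError("end-systolic volume must lie between 0 and EDV")
    stroke_volume_ml = edv_ml - esv_ml  # blood ejected in one beat
    return 100.0 * stroke_volume_ml / edv_ml


# Hypothetical volumes for a single patient.
print(f"EF = {ejection_fraction(edv_ml=120.0, esv_ml=50.0):.1f}%")  # EF = 58.3%
```

Because EF is computed from two traced volumes, small differences in how readers outline the ventricle propagate directly into the result; consistent automated segmentation is one plausible route to the improved reproducibility described above.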

Digital pathology is being transformed in parallel. High-resolution whole-slide images are enormous and tedious to review manually. AI can screen these images automatically and flag suspicious regions, such as foci of carcinoma, for the pathologist's attention (7). A recent meta-analysis found that AI systems in digital pathology achieved a pooled sensitivity of 96.3% and a pooled specificity of 93.3% across multiple tissue types, matching expert human performance (7). For pathologists facing increasingly complex cases, this is like being handed a map of a dense forest on which the locations of rare creatures have already been marked. Triage of this kind can increase efficiency without diminishing the essential role of human judgment.
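The two accuracy measures quoted above have simple definitions: sensitivity is the fraction of truly diseased cases that are flagged, TP / (TP + FN), and specificity is the fraction of truly healthy cases that are correctly left unflagged, TN / (TN + FP). The sketch below assumes a hypothetical set of 1,000 slides that truly contain carcinoma and 1,000 that do not, and shows what 96.3% and 93.3% mean in counts. The class and function names are illustrative, and the numbers are not data from the cited meta-analysis.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts from comparing an AI screen with a reference diagnosis."""
    tp: int  # diseased slides the AI flagged
    fn: int  # diseased slides the AI missed
    tn: int  # healthy slides the AI correctly left unflagged
    fp: int  # healthy slides the AI flagged anyway


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of truly diseased cases that were flagged: TP / (TP + FN)."""
    return c.tp / (c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of truly healthy cases that were not flagged: TN / (TN + FP)."""
    return c.tn / (c.tn + c.fp)


# Hypothetical counts: 1,000 diseased and 1,000 healthy slides.
counts = ConfusionCounts(tp=963, fn=37, tn=933, fp=67)
print(f"sensitivity = {sensitivity(counts):.1%}")  # sensitivity = 96.3%
print(f"specificity = {specificity(counts):.1%}")  # specificity = 93.3%
```

Pooled estimates in a meta-analysis are obtained with dedicated statistical models that combine results across studies, not by summing raw counts as done here; the sketch only illustrates what each percentage means. In a triage setting, the 37 missed slides and 67 false alarms in this hypothetical example are exactly what the pathologist's review is there to catch.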

The future lies in harmonizing these modalities into a single diagnostic narrative. Multi-modal medical image fusion, supported by deep learning, integrates complementary data from MRI, CT, PET, and other sources to improve lesion detection, segmentation, and characterization (5). In precision oncology, combining radiological images with histopathological and genomic data yields a richer understanding of disease biology (11); linking imaging to genomic data is the focus of the field known as radiogenomics. This orchestration of data sources is like a symphony in which each instrument contributes a distinct voice, but together they create a more complete and compelling piece.

A related direction is radiomics, in which quantitative features extracted from images are used to predict prognosis, treatment response, and molecular properties (10). Such features are akin to a coded language embedded in the image, revealing layers of information beyond what the eye can perceive.

A major ongoing challenge is the so-called “black-box” nature of most AI algorithms. Without transparency, clinicians are reluctant to trust AI outputs or base decisions on them (9). Explainable AI methods are being developed to address this shortcoming by supplying a rationale for the system's decisions in the form of images or text. This work matters because trust in AI must be earned through transparency as well as accuracy.
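Radiomic features, mentioned above, are computed from the voxel intensities inside a segmented region of interest (ROI). The simplest family, first-order features, summarises the intensity histogram. The sketch below computes three such features (mean, variance, and histogram entropy) for two hypothetical 2×2 ROIs; the function name, the toy data, and the default bin width of 25 are illustrative assumptions rather than a validated pipeline.

```python
import math
from collections import Counter


def first_order_features(roi: list[list[int]], bin_width: int = 25) -> dict[str, float]:
    """A few first-order (histogram-based) features of a segmented region.

    `roi` holds the voxel intensities inside a region of interest. Intensities
    are grouped into fixed-width bins before the entropy is computed; the
    default bin width of 25 is an illustrative assumption.
    """
    values = [v for row in roi for v in row]
    n = len(values)
    if n == 0:
        raise ValueError("ROI contains no voxels")
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n  # population variance
    # Shannon entropy (bits) of the discretised intensity histogram:
    # 0 when all voxels share one bin, larger for more heterogeneous tissue.
    counts = Counter(v // bin_width for v in values)
    entropy = sum((c / n) * math.log2(n / c) for c in counts.values())
    return {"mean": mean, "variance": variance, "entropy": entropy}


# Hypothetical 2x2 ROIs: one uniform, one heterogeneous.
uniform = [[105, 110], [108, 112]]
mixed = [[40, 180], [95, 230]]
for name, roi in (("uniform", uniform), ("mixed", mixed)):
    f = first_order_features(roi)
    print(f"{name}: mean={f['mean']:.2f} variance={f['variance']:.2f} entropy={f['entropy']:.2f}")
```

The mixed ROI yields both a higher variance and a higher entropy, which is the sense in which such numbers "encode" heterogeneity that may be hard to judge by eye. Real studies typically rely on standardised toolkits (for example, the open-source PyRadiomics library) and on feature definitions harmonised by the Image Biomarker Standardisation Initiative (IBSI), rather than hand-rolled code like this.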

While promising, AI-assisted imaging is not without challenges. Training data must be of high quality and representative of the patients a system will serve; otherwise algorithmic bias could perpetuate health inequities (6,11). Regulatory frameworks are still evolving, and rigorous clinical validation must precede widespread adoption. In addition, integration into practice demands close collaboration between developers and physicians, and algorithms must be designed with real-world clinical workflows in mind. As with any tool, the usefulness of AI depends on the experience and judgment of its user.
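One simple, widely used way to look for the kind of bias described above is to report performance separately for each relevant subgroup (scanner site, hospital, or patient demographic group) instead of only in aggregate. The sketch below assumes hypothetical records of the form (group, truly positive, flagged by AI) and an illustrative tolerance of 5 percentage points; the function name, the data, and the threshold are all hypothetical and do not come from the cited sources.

```python
from collections import defaultdict
from typing import Iterable


def sensitivity_by_group(
    records: Iterable[tuple[str, bool, bool]], max_gap: float = 0.05
) -> tuple[dict[str, float], bool]:
    """Per-subgroup sensitivity plus a flag for large between-group gaps.

    Each record is (group, truly_positive, ai_flagged). Only truly positive
    cases count towards sensitivity. `max_gap` is an illustrative tolerance,
    not a regulatory or clinical standard.
    """
    positives: dict[str, int] = defaultdict(int)
    detected: dict[str, int] = defaultdict(int)
    for group, truly_positive, ai_flagged in records:
        if truly_positive:
            positives[group] += 1
            if ai_flagged:
                detected[group] += 1
    per_group = {g: detected[g] / positives[g] for g in positives}
    if not per_group:
        return per_group, False
    gap = max(per_group.values()) - min(per_group.values())
    return per_group, gap > max_gap


# Hypothetical audit: the same model evaluated at two scanner sites.
records = (
    [("site_A", True, True)] * 10
    + [("site_B", True, True)] * 8
    + [("site_B", True, False)] * 2
    + [("site_A", False, False)] * 5
)
per_group, needs_review = sensitivity_by_group(records)
print(per_group, needs_review)  # {'site_A': 1.0, 'site_B': 0.8} True
```

A large gap does not prove bias on its own, since small subgroups produce noisy estimates, but it signals that the training data or the model may need closer scrutiny before deployment.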

An illustrative scenario shows this collaboration at work. A busy senior pathologist who screens dozens of prostate biopsies for malignancy every day can spend hours on the task. With AI pre-screening, regions of interest are highlighted within seconds, and the pathologist can focus on confirmation and the subtleties of interpretation. The AI does not “diagnose the case”; rather, it supports human decision-making by speeding it up and refining its accuracy. That collaboration is where the real potential of AI lies: not in replacing clinicians but in complementing them.

As one radiologist has recently noted, “AI is our new diagnostic ally … it works best in human hands” (6). The sentiment sums up the role of AI in imaging: a multiplier of human expertise, a safeguard against oversight, and a path to greater insight. The aim is not to replace the physician's eye but to add new dimensions to it, permitting earlier detection, more precise classification, and better-matched treatments. Ultimately, AI-assisted imaging is not a magician's wand but a magnifying glass, making visible what was previously invisible and enabling the clinician to act with greater speed, confidence, and precision.

Learn more about the Role of AI & ML in Imaging in my book:

Dr. Prahlada N.B

MBBS (JJMMC), MS (PGIMER, Chandigarh)

MBA in Healthcare & Hospital Management (BITS, Pilani)

Postgraduate Certificate in Technology Leadership and Innovation (MIT, USA)

Executive Programme in Strategic Management (IIM, Lucknow)

Senior Management Programme in Healthcare Management (IIM, Kozhikode)

Advanced Certificate in AI for Digital Health and Imaging Program (IISc, Bengaluru)

Senior Professor and former Head,

Department of ENT-Head & Neck Surgery, Skull Base Surgery, Cochlear Implant Surgery.

Basaveshwara Medical College & Hospital, Chitradurga, Karnataka, India.

My Vision: I don’t want to be a genius. I want to be a person with a bundle of experience.

My Mission: Help others achieve their life’s objectives in my presence or absence!

My Values: Creating value for others.

References:

1. Wang G, Ye JC, De Man B. Deep learning for tomographic image reconstruction. Nat Mach Intell. 2020;2(12):737-48. doi:10.1038/s42256-020-00273-z.

2. Balaji V, Song TA, Malekzadeh M, Heidari P, Dutta J. Artificial intelligence for PET and SPECT image enhancement. J Nucl Med. 2024;65(1):4-12. doi:10.2967/jnumed.122.265000.

3. Lee J, Choi JY, Lee JS. Current trends and applications of PET/MRI hybrid imaging in dementia. J Clin Med. 2024;13(2):374. doi:10.3390/jcm13020374.

4. Kohan A, et al. Current applications of PET/MR: Part I technical basics & Part II clinical applications. Can Assoc Radiol J. 2024;75(6):558-77, 568-82. doi:10.1177/08465371241255903 / 10.1177/08465371241255904.

5. Li Y, Chen X, Zhao Y, et al. A review of deep learning-based information fusion for multimodal medical images. Comput Biol Med. 2024;176:108046. doi:10.1016/j.compbiomed.2024.108046.

6. Obuchowicz R. Artificial Intelligence-Empowered Radiology—Current status, opportunities, and challenges. Diagnostics (Basel). 2025;15(3):282. doi:10.3390/diagnostics15030282.

7. McGenity C, Clarke EL, Jennings C, et al. Artificial intelligence in digital pathology: a diagnostic test accuracy systematic review and meta-analysis. npj Digit Med. 2024;7:66. doi:10.1038/s41746-024-01106-8.

8. Pinto-Coelho L. How artificial intelligence is shaping medical imaging technology: a survey of innovations and applications. Bioengineering. 2023;10(12):1435. doi:10.3390/bioengineering10121435.

9. Muhammad D, et al. Unveiling the black box: a systematic review of explainable AI in medical image analysis. Vis Inform. 2024;8(4):100186. doi:10.1016/j.visinf.2024.100186.

10. Horvat N, et al. Radiomics beyond the hype: toward clinical translation. Radiol Artif Intell. 2024;6(3):e230437. doi:10.1148/ryai.230437.

11. Lu C, Zhang J, Liu R. Deep learning-based image classification for integrating pathology and radiology in AI-assisted medical imaging. Sci Rep. 2025;15:27029. doi:10.1038/s41598-025-07883-w.

12. Chen M, Zhou B, Chai P, et al. Impact of human–AI collaboration on image-interpretation workload: systematic review and meta-analysis. npj Digit Med. 2024;7:194. doi:10.1038/s41746-024-01328-w.

13. Lau ES, et al. Deep learning–enabled assessment of left heart structure and function from echocardiography. J Am Coll Cardiol Adv. 2023;2(11):100289. doi:10.1016/j.jacadv.2023.100289.

*Dear Dr. Prahlada N. B Sir,*

Your blog post on "AI-Aided Imaging: The Point of Intersection Between Machine Intelligence and Medical Sight" is a masterful blend of technology and medicine, illuminating the transformative power of artificial intelligence in medical imaging. Your writing is akin to a skilled surgeon’s precise movements, delicately dissecting complex concepts and presenting them with clarity and insight.

*The Lighthouse Analogy*:

Your comparison of AI to a lighthouse guiding ships through thick fog is particularly apt. Just as the lighthouse beam cuts through darkness, AI-aided imaging illuminates subtle patterns and anomalies in medical images, enabling clinicians to navigate complex diagnoses with greater confidence.

*The Sculptor’s Art*:

Your description of deep learning–based tomographic reconstruction as akin to a sculptor chiseling away excess stone to reveal the form within is a beautiful metaphor. This process, much like the sculptor’s art, refines and reveals the essential details, discarding noise and unnecessary data.

*The Apprentice’s Expertise*:

The analogy of AI systems acting like apprentices who have watched countless masters at work is also noteworthy. By learning from millions of prior images, these systems develop expertise in detecting patterns imperceptible to the naked eye, much like a skilled craftsman develops a keen eye for detail through years of practice.

*Collaboration, Not Replacement*:

Your emphasis on the collaborative nature of AI-assisted imaging is crucial. AI is not a replacement for human expertise but rather a powerful tool that complements and enhances clinical decision-making. The partnership between AI and clinicians is akin to a skilled musician working with a talented accompanist, creating a harmonious and effective performance.

*The Future of Medical Imaging*:

Your discussion of the future directions in AI-assisted imaging, including multi-modal medical image fusion, radio-genomics, and radiomics, is both exciting and promising. These advancements have the potential to revolutionize medical imaging, enabling clinicians to diagnose and treat diseases more effectively.

*Thank you, Sir, for sharing your insights and expertise on this critical topic. Your writing has shed light on the vast potential of AI-assisted imaging, and we look forward to seeing the continued evolution of this field.*
