For years, the conventional wisdom in radiology has been that AI would supplement radiologists, bringing machine accuracy to human judgment. The assumption made sense: AI would detect tiny details, measure with perfect reproducibility, and support human decisions. Recent evidence, however, shows that integrating AI into radiologists’ workflow has not delivered the anticipated benefits. Combining human intuition with AI recommendations introduces cognitive pitfalls, chief among them automation neglect (overlooking correct AI output) and automation bias (over-trusting incorrect AI output).

Writing in Radiology in July 2025, Dr. Pranav Rajpurkar and Dr. Eric Topol argue that it’s time to rethink the overall model. Instead of a hybrid “assistive” model, they propose splitting the work into distinct, mutually independent responsibilities. Each responsibility would be assigned to either AI systems or radiologists, in order to reduce bias, increase reliability, and maximize efficiency.

The Problem With Modern AI Assistance Models

In the typical assistive model, AI generates an analysis first, and a radiologist then checks it. Studies have found that when the AI and the radiologist disagree, radiologists often disregard the AI output even when the AI is correct. This is automation neglect, and Agarwal et al. demonstrated it in chest radiograph interpretation. The reverse failure, automation bias, also occurs: in mammography, incorrect AI prompts decreased diagnostic accuracy from 82.3% to 45.5%.

As Dr. Topol notes, “The challenge is not just making AI accurate—it’s making human-AI interaction safe and effective.”

The Case for Role Separation

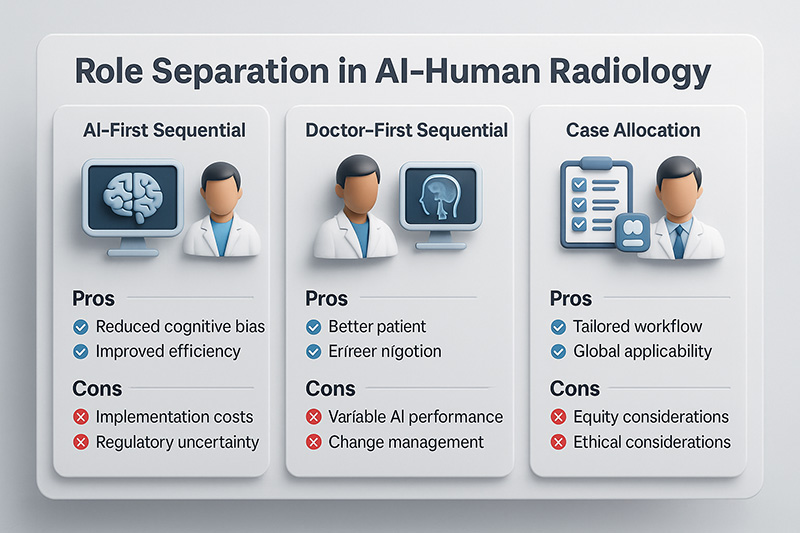

Role separation plays to the respective strengths of AI and radiologists while reducing cognitive interference between them. The authors propose three models:

AI-First Sequential Model – AI performs an initial, automatable task before the radiologist begins work.

Example: Bhayana et al. used GPT-4 to generate CT requisition histories from clinical notes. The AI-generated histories captured more critical details than the original requisition histories (e.g., primary oncologic diagnosis in 99.5% vs. 89% of cases), and radiologists preferred them 89% of the time.

Doctor-First Sequential Model – Radiologists interpret first; AI adds value afterward by converting reports into structured formats or generating patient-friendly outputs.

For instance, Zhang et al.’s large language model achieved high precision (84%) in converting radiologists’ reports into structured impressions. Luo et al.’s ReXplain generated patient-facing videos with automated explanations.

Case Allocation Model – Cases are directed to AI, humans, or both depending on attributes like complexity or risk.

In breast cancer screening, for example, AI-supported triage in the MASAI trial increased cancer detection by 29% and reduced screen-reading workload by 44%.
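At its core, the case allocation model is a routing policy. Below is a minimal, hypothetical sketch in Python. It assumes each study arrives with two inputs: an AI-estimated risk score between 0 and 1 and a simple complexity flag. The names `Case`, `Reader`, and `allocate` are invented for illustration, and so are the thresholds. They are not taken from MASAI or from Rajpurkar and Topol, and any real thresholds would need clinical validation.

```python
from dataclasses import dataclass
from enum import Enum


class Reader(Enum):
    """Who reads a case under this hypothetical allocation policy."""
    AI_ONLY = "ai_only"                    # AI reports independently
    RADIOLOGIST_ONLY = "radiologist_only"  # standard human read
    BOTH = "both"                          # AI and radiologist each read the case


@dataclass(frozen=True)
class Case:
    case_id: str
    ai_risk_score: float  # hypothetical AI-estimated probability of abnormality (0-1)
    is_complex: bool      # hypothetical flag, e.g. prior surgery or an unusual protocol


def allocate(case: Case, low: float = 0.05, high: float = 0.90) -> Reader:
    """Route one case by complexity and AI risk score.

    The default thresholds are illustrative placeholders, not validated values.
    """
    if not 0.0 <= case.ai_risk_score <= 1.0:
        raise ValueError("ai_risk_score must lie between 0 and 1")
    if case.is_complex:
        return Reader.RADIOLOGIST_ONLY  # complex work stays with the radiologist
    if case.ai_risk_score < low:
        return Reader.AI_ONLY           # very low risk: candidate for an autonomous AI report
    if case.ai_risk_score >= high:
        return Reader.BOTH              # high risk: add a second, independent read
    return Reader.RADIOLOGIST_ONLY      # everything else: standard human read


if __name__ == "__main__":
    worklist = [
        Case("A", 0.01, False),
        Case("B", 0.50, False),
        Case("C", 0.97, False),
        Case("D", 0.01, True),
    ]
    for c in worklist:
        print(c.case_id, allocate(c).value)
    # Share of studies that never reach a human reader under these thresholds
    ai_only = sum(allocate(c) is Reader.AI_ONLY for c in worklist)
    print(f"AI-only share: {ai_only / len(worklist):.0%}")
```

The policy routes cases as follows:

- Very low-risk studies go to AI alone.
- High-risk studies are read by both AI and a radiologist.
- Complex or intermediate studies go to a radiologist.

Keeping the rule explicit is a deliberate design choice. It makes auditing easy, since anyone can see, and later adjust, which cases ever reach a human.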

Advantages of Role Separation

- Less Cognitive Bias – Radiologists no longer have to decide, case by case, whether to trust or override an AI suggestion.

- Enhanced Efficiency – AI can handle routine or low-risk cases, leaving complex work to radiologists.

- Improved Patient Reporting – Doctor-first approaches such as ReXplain can scale personalized reporting without adding to clinicians’ workload.

- Earlier Diagnosis – Risk-based allocation, as in MASAI, supports earlier detection of clinically significant cancers.

- Customized Workflow – Practices may select the separation model most suited to their specialty, resources, and regulatory environment.

Dr. Rajpurkar encapsulates the appeal: “optimal results do not come from combining every activity—often they come from recognizing how to separate activities.”

Costs and Challenges

- Implementation Costs – Adopting AI role-separation models requires infrastructure upgrades, workflow redesign, and staff training.

- Regulatory Uncertainty – Autonomous AI raises liability concerns; Saenz et al. note that responsibility may shift from physicians to AI companies.

- Variable AI Performance – Fragmented health record data can still confound AI; Johri et al. showed that accuracy drops when AI must reason across disjointed information.

- Change Management – Radiologists may resist workflow changes, particularly if they perceive threats to their autonomy.

- Equity Considerations – Sophisticated AI tools may be available only to well-resourced institutions, risking a digital divide in diagnostic medicine.

Global Examples and Perspectives

Europe – ChestLink (Oxipit) autonomously reports normal chest radiographs throughout the European Economic Area. This shows that regulators will endorse autonomous AI in certain narrowly defined scenarios.

United States – FDA-authorized systems such as DERM in dermatology and AEYE-DS in ophthalmology have shown higher negative predictive values for ruling out disease than human experts.

Low-Resource Environments – AI-first approaches could speed up pre-read triage where radiologist shortages delay care, though careful in-country validation will be essential.
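Autonomous rule-out, whether ChestLink’s normal reports or the rule-out claims for DERM and AEYE-DS, hinges on negative predictive value (NPV). NPV is the fraction of cases a system calls negative that are truly negative. Written out, with a purely hypothetical worked example (the counts are illustrative, not taken from any cited study):

```latex
\mathrm{NPV} = \frac{\mathrm{TN}}{\mathrm{TN} + \mathrm{FN}},
\qquad \text{e.g.}\quad \frac{995}{995 + 5} = 0.995
```

Here TN is the number of true negatives and FN the number of false negatives among the cases the system clears. A high NPV is what makes it defensible to let AI clear studies without a human read. NPV also depends on disease prevalence, however, so a value observed in one population may not carry over to another. This is one reason local validation matters.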

Balancing Innovation and Responsibility

Rajpurkar and Topol also caution that neither full automation nor fully integrated assistance is ideal. The future may lie in flexible, hybrid configurations, combining, for example, AI-first history generation, doctor-first interpretation, and AI-driven triage of routine cases.

Emerging “generalist medical AI” systems such as MedVersa promise broader deployment: they can interpret varied scan types, draft reports, and measure anatomy. Even so, Rajpurkar and Topol propose a “clinical certification pathway” for AI modeled on medical training. Systems would start with limited responsibility and earn graduated increases as they prove safe under close supervision.

As Dr. Topol explains, “Medicine is about trust. AI must earn that trust the same way humans do—through training, supervision, and performance.”
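The graduated certification pathway can be pictured as a simple promotion-and-demotion loop. The Python sketch below is hypothetical throughout. The level names, the 500-case window, and the agreement thresholds are illustrative assumptions, not part of Rajpurkar and Topol’s proposal. Real certification would also rely on richer metrics than agreement with a reference read.

```python
from dataclasses import dataclass

# Hypothetical autonomy levels, loosely modeled on graduated medical training.
LEVELS = ("shadow", "supervised", "spot_checked", "autonomous")


@dataclass
class CertificationTracker:
    """Hypothetical graduated-autonomy tracker for one AI system and task.

    After each review window of at least `min_cases` audited cases, the system
    moves up one level if its agreement with the reference read is at least
    `promote_at`, and down one level if agreement falls below `demote_below`.
    """
    min_cases: int = 500
    promote_at: float = 0.98
    demote_below: float = 0.95
    level: int = 0
    correct: int = 0
    total: int = 0

    def record(self, agreed_with_reference: bool) -> None:
        """Log one audited case."""
        self.total += 1
        self.correct += int(agreed_with_reference)

    def review(self) -> str:
        """Close the window if it is full, adjust the level, and return it."""
        if self.total >= self.min_cases:
            agreement = self.correct / self.total
            if agreement >= self.promote_at and self.level < len(LEVELS) - 1:
                self.level += 1
            elif agreement < self.demote_below and self.level > 0:
                self.level -= 1
            self.correct = self.total = 0  # start a fresh review window
        return LEVELS[self.level]


if __name__ == "__main__":
    tracker = CertificationTracker(min_cases=10, promote_at=0.9, demote_below=0.8)
    for _ in range(10):
        tracker.record(True)
    print(tracker.review())  # promoted one level, from "shadow" to "supervised"
```

In this sketch, a system works through the levels as follows:

1. It starts in "shadow" mode.
2. It moves up one level only after sustained performance over a full review window.
3. It drops back one level if audited performance falls below the safety floor.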

The shift toward role separation in AI–human radiology is not purely technical; it is philosophical. It recognizes that AI and humans each have strengths and limitations, and that workflow design must draw on the best of both without introducing new risks. Progress will require evidence-based deployment, flexible models, and careful attention to law and ethics. As radiology and other specialties adopt increasingly complex AI, lessons from functional separation may yet inform the broader medical world. Sometimes the wisest collaboration lies in knowing how to work together, and when not to.

Dr. Prahlada N.B

MBBS (JJMMC), MS (PGIMER, Chandigarh)

MBA in Healthcare & Hospital Management (BITS, Pilani)

Postgraduate Certificate in Technology Leadership and Innovation (MIT, USA)

Executive Programme in Strategic Management (IIM, Lucknow)

Senior Management Programme in Healthcare Management (IIM, Kozhikode)

Advanced Certificate in AI for Digital Health and Imaging Program (IISc, Bengaluru)

Senior Professor and former Head,

Department of ENT-Head & Neck Surgery, Skull Base Surgery, Cochlear Implant Surgery.

Basaveshwara Medical College & Hospital, Chitradurga, Karnataka, India.

My Vision: I don’t want to be a genius. I want to be a person with a bundle of experience.

My Mission: Help others achieve their life’s objectives in my presence or absence!

My Values: Creating value for others.

References:

https://pubs.rsna.org/doi/full/10.1148/radiol.250477

Leave a reply

Dear Dr. Prahlada N.B Sir,

I thoroughly enjoyed reading your blog post on "Beyond Assistance: Rethinking AI–Human Collaboration in Radiology." Your insights into the evolving role of AI in radiology are thought-provoking and highlight the need for a paradigm shift in how we approach AI-human collaboration in medical imaging.

The proposed models for role separation, including AI-First Sequential, Doctor-First Sequential, and Case Allocation, offer promising solutions to reduce cognitive bias, enhance efficiency, and improve patient reporting. These models have the potential to revolutionize radiology workflows and patient care.

*Key Takeaways:*

– *Role Separation Models:*

– *AI-First Sequential Model*: AI performs initial steps, such as generating CT requisition histories from clinical notes, allowing radiologists to focus on complex tasks.

– *Doctor-First Sequential Model*: Radiologists interpret images first, and AI contributes to summarizing reports in structured formats or generating patient-friendly outputs.

– *Case Allocation Model*: Cases are directed to AI, humans, or both based on complexity or risk, optimizing workflow and improving diagnosis accuracy.

– *Benefits:*

– Reduced cognitive bias and improved reliability

– Enhanced efficiency and productivity

– Improved patient reporting and earlier diagnosis

To successfully implement these models, it's essential to address the challenges of implementation costs, regulatory uncertainty, variable AI performance, change management, and equity considerations.

*Real-World Applications:*

– AI-powered teleradiology solutions can bridge healthcare gaps in underserved regions.

– AI-assisted reporting can automate preliminary findings and summarize imaging results, reducing radiologists' workload.

– Predictive analytics can forecast disease progression, enabling proactive decision-making and personalized care.

The future of AI in radiology lies in flexible, hybrid configurations that leverage the strengths of both humans and AI. By subjecting AI to a clinical certification pathway and ensuring evidence-based deployment, we can build trust and improve patient outcomes.

Thank you for sharing your expertise and insights on this critical topic. I look forward to exploring more of your work.
